President Joe Biden signed a sweeping executive order regulating the development of artificial intelligence.

Photo: Getty Images

Biden signed a sweeping executive order regulating the development of artificial intelligence, saying he wants to prevent AI from making social media “more addictive” or from abetting fraud.

Biden noted that he’s seen a “deep fake” video of himself, using a term for the increasingly prevalent and convincing doctored videos.

His concerns sharpened after he watched Tom Cruise’s latest action thriller, “Mission: Impossible – Dead Reckoning Part One,” according to White House deputy chief of staff Bruce Reed.

“If [Biden] hadn’t already been concerned about what could go wrong with AI before that movie, he saw plenty more to worry about,” Reed said.

Reed said he watched the film with Biden at Camp David after its release in July.

AI is known to be a concern for Biden, whom Reed described as “profoundly curious about the technology.”

Reed added that Biden had seen AI create “fake AI images of himself [and] of his dog,” as well as “the incredible and terrifying technology of voice cloning.”

“[AI] can take three seconds of your voice and turn it into an entire fake conversation.”

As Bloomberg notes:

Governments don’t have a great track record of keeping up with emerging technology. But the complex, rapidly evolving field of artificial intelligence raises legal, national security and civil rights concerns that can’t be ignored. Some US cities and states have already passed legislation limiting use of AI in areas such as police investigations and hiring, and the European Union has proposed a sweeping law that would put guardrails on the technology. While the US Congress works on legislation, President Joe Biden is directing government agencies to vet future AI products for potential national or economic security risks.

Why does AI need regulating?

Already at work in products as diverse as toothbrushes and drones, systems based on AI have the potential to revolutionize industries from health care to logistics. But replacing human judgment with machine learning carries risks. Even if the ultimate worry — fast-learning AI systems going rogue and trying to destroy humanity — remains in the realm of fiction, there already are concerns that bots doing the work of people can spread misinformation, amplify bias, corrupt the integrity of tests and violate people’s privacy. Reliance on facial recognition technology, which uses AI, has already led to people being falsely accused of crimes. A fake AI photo of an explosion near the Pentagon spread on social media, briefly pushing US stocks lower. Google, Microsoft, IBM and OpenAI have encouraged lawmakers to implement federal oversight of AI, which they say is necessary to guarantee safety.

What’s been done in the US?

Biden’s executive order on AI sets standards on security and privacy protections and builds on voluntary commitments adopted by more than a dozen companies. Members of Congress have shown intense interest in passing laws on AI, which would be more enforceable than the White House effort, but an overriding strategy has yet to emerge. Among more narrowly targeted bills proposed so far, one would prohibit the US government from using an automated system to launch a nuclear weapon without human input; another would require that AI-generated images in political ads be clearly labeled. At least 25 US states considered AI-related legislation in 2023, and 15 passed laws or resolutions, according to the National Conference of State Legislatures. Proposed legislation sought to limit use of AI in employment and insurance decisions, health care, ballot-counting and facial recognition in public settings, among other objectives.

What’s the EU working on?

Building on laws addressing privacy and hate speech, the EU is working out the specifics of an AI Act, to take effect in stages by 2026. It’s the first attempt by a Western government to oversee how developers handle risks and deploy their models. It would ban categories of AI seen as most potentially exploitative or manipulative of the public, such as any system that enables the kind of social scoring used in China, where citizens earn credit based on surveillance of their behavior. Other AI applications deemed of high (but not unacceptable) risk, such as programs used to analyze and rank job candidates, would be subject to rules on disclosure, record-keeping and human oversight. Another focus is on transparency: People would need to be informed when interacting with an AI system, and any AI-generated or manipulated content would need to be flagged.

What do the companies say?

Leading technology companies including Amazon.com, Alphabet, International Business Machines and Salesforce pledged to follow the Biden administration’s voluntary transparency and security standards, including putting new AI products through internal and external tests before their release. In September, Congress summoned tech tycoons including Elon Musk and Bill Gates to advise on its efforts to create a regulatory regime, concludes Bloomberg.

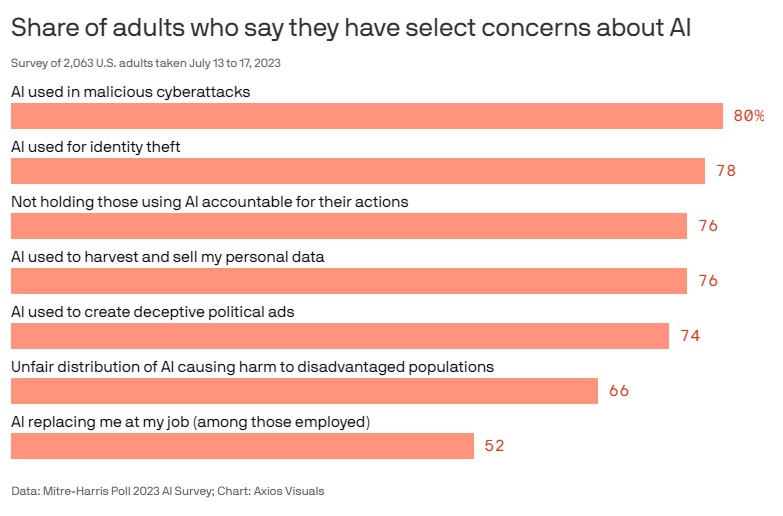

The majority of U.S. adults don't believe the benefits of artificial intelligence outweigh the risks, according to a new Mitre-Harris Poll.

By the numbers: 54% of the 2,063 adults in a Mitre-Harris Poll survey in July said they were more concerned about the risks of AI than they were excited about the potential benefits.

At the same time, 39% of adults said they believed today's AI technologies are safe and secure — down 9 points from the previous survey in November 2022.

Why it matters: AI operators and the tech industry are eyeing new regulations and policy changes to secure their models and mitigate the security and privacy risks associated with them.

The new survey data is some of the first to highlight the growing support for these regulatory efforts.

Respondents were more concerned about AI being used in malicious cyberattacks (80%) and identity theft schemes (78%) than about it being used to cause "harm to disadvantaged populations" (66%) or to replace their jobs (52%).

Roughly three-fourths of respondents were also concerned about AI technologies being used to harvest and sell their personal data.

Yes, but: Not all demographics feel the same wariness about AI technologies.

57% of Gen Z respondents and 62% of millennials said they were more excited about the potential benefits of AI than they were worried about the risks.

Men were also more likely than women to say they were more excited than concerned about AI technologies (51% vs. 40%).

11:15 11.11.2023
